0

Notifications Mark All Read

- Login

- Get Prime

Critical Section Problem in Operating System (OS)

Critical Section Problem

On this page we will discuss about critical section problem in operating system (OS) .Critical section problem is a classical computer science problem which we will discuss on this page.

Critical Section Problem in Operating system

Critical Section problem is a classic computer science problem in many banking systems, where there are many shared resources like memory, I/O etc. Concurrent access may override changes made by other process running in parallel.

We already know about Critical section of code from Process synchronization post. Critical section is a segment of code in which process changes common variable, updates file etc.

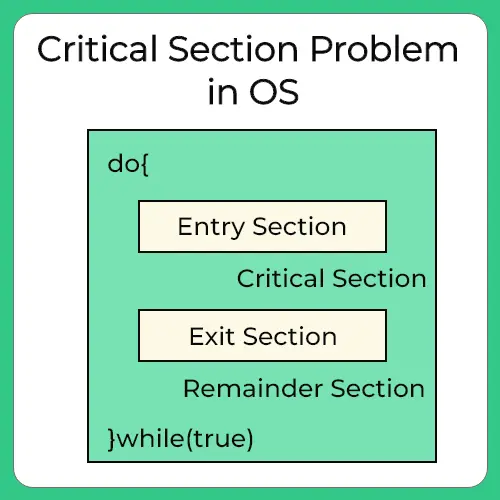

The Critical Section Problem is to design a protocol which processes use to cooperate .To enter into critical section, a process requests permission through entry section code. After critical section code is executed, then there is exit section code, to indicate the same.

Constituents of Critical Section

The main blocks of process are –

- Entry Section – To enter the critical section code, a process must request permission. Entry Section code implements this request.

- Critical Section – This is the segment of code where process changes common variables, updates a table, writes to a file and so on. When 1 process is executing in its critical section, no other process is allowed to execute in its critical section.

- Exit Section – After the critical section is executed , this is followed by exit section code which marks the end of critical section code.

- Remainder Section – The remaining code of the process is known as remaining section.

Example of Negative Effect

When several threads (or processes) share data, running in parallel on different cores , then changes made by one process may override changes made by another process running parallel. Resulting in inconsistent data. So, this requires processes to be synchronized, handling system resources and processes to avoid such situation is known as Process Synchronization.

CLASSIC BANKING EXAMPLE –

- Consider your bank account has 5000$.

- You try to withdraw 4000$ using net banking and simultaneously try to withdraw via ATM too.

- For Net Banking at time t = 0ms bank checks you have 5000$ as balance and you’re trying to withdraw 4000$ which is lesser than your available balance. So, it lets you proceed further and at time t = 1ms it connects you to server to transfer the amount

- Imagine, for ATM at time t = 0.5ms bank checks your available balance which currently is 5000$ and thus let’s you enter ATM password and withdraw amount.

- At time t = 1.5 ms ATM dispenses the cash of 4000$ and at time t = 2 net banking transfer is complete of 4000$.

EFFECT ON THE SYSTEM

Now, due to concurrent access and processing time that computer takes in both ways you were able to withdraw 3000$ more than your balance. In total 8000$ were taken out and balance was just 5000$.

Critical Section Problem conditions

A critical solution problem satisfies 3 things –

- Mutual Exclusion must be preserved.

- Progress Requirement must be satisfied.

- Bounded waiting time requirement is met.

Let’s look at each of these in detail –

- Mutual Exclusion is preserved – If a process P1 is executing in its critical section, no other process can execute in critical section.

- Process or Progress Requirement must be satisfied – If no process is executing in its critical section, then the decision as to which process will go next into executing its critical section code is decided by only those process which are in entry section, that is, not before it. That is the process which are in remainder section can not take part in this decision. Also, this selection has to be made in certain time, it can’t be postponed indefinitely.

- Bounded Waiting time requirement is met – If a process A has made a request to enter its critical section, then there is a limit on the number of times , other processes go before into their critical section. So, waiting time, as a result is bonded. After a certain time, the process gets to be in critical Section, doesn’t wait indefinitely.

Advanced Topics

To handle critical section is Operating System, There are 2 ways –

- Preemptive Kernel

- Non Preemptive Kernel

Preemptive Kernel

- Allows process to be preempted while it’s running in kernel mode.

- Can be prone to race around condition, if poorly designed. So, must be carefully designed .

- Difficult to Design for SMP Architecture as in this environment, it’s possible for 2 Kernel -Mode processes to run simultaneously on 2 different processes.

- It’s more responsible, as less risk for any process to run indefinitely.

- Suitable for Real Time Programming.

What is Race Around Condition ?

If many kernel processes in OS, it may lead to race around condition.

Eg – Consider a kernel data structure that maintains a list of all open files in system. List is modified if a new file is opened or closed. If 2 process simultaneously try to open files , it may separate updates to list leading to race around condition

Non Preemptive Kernel –

- Does not allow a process running in Kernel Mode to be preempted, that is stopped. Only after the process exits the Kernel Mode by self, will the next process be given the kernel.

- Free from Race Around Condition as only 1 process is executed at a time in kernel.

The software based solution to critical section problem is Peterson’s Algorithm, Semaphores, Monitors etc

We will discuss all of these in details in the future posts in the series.

The hardware based solution to critical section is exclusive access to memory location, atomic swap etc.

Prime Course Trailer

Related Banners

Get PrepInsta Prime & get Access to all 200+ courses offered by PrepInsta in One Subscription

Get over 200+ course One Subscription

Courses like AI/ML, Cloud Computing, Ethical Hacking, C, C++, Java, Python, DSA (All Languages), Competitive Coding (All Languages), TCS, Infosys, Wipro, Amazon, DBMS, SQL and others

0

0

Login/Signup to comment