Least Recently Used (LRU) Algorithm

Introduction to Page Replacement Algorithms

Page replacement algorithms are essential components of operating systems that manage the allocation and deallocation of memory pages.

These algorithms decide which pages to keep in the main memory and which to evict when new pages need to be loaded. The efficiency of these algorithms significantly impacts system performance.

Understanding the LRU Algorithm

The Least Recently Used (LRU) algorithm is a popular page replacement strategy that operates on the principle of locality of reference. It assumes that if a page is referenced recently, it is likely to be referenced again in the near future.

To implement the LRU algorithm, the system maintains a list or a queue of pages in the main memory. When a page needs to be replaced, the algorithm selects the page that has the earliest access time, indicating that it has been the least recently used. This selected page is then swapped out with the new page.

Implementing LRU Algorithm in Operating Systems

Operating systems often implement the LRU algorithm to manage virtual memory and disk caching. The LRU algorithm can be implemented using various data structures such as linked lists, hash tables, or priority queues.

These data structures enable efficient tracking of page access times and facilitate the selection of the least recently used page for replacement.

LRU Algorithm:

1. Initialize an empty cache of size N (number of frames) and an empty queue (used to keep track of the order of page references).

2. While processing page references:

a. Read the next page reference.

b. If the page is present in the cache (cache hit), move the corresponding entry to the front of the queue.

c. If the page is not present in the cache (cache miss):

i. If the cache is full (all frames are occupied):

1. Remove the least recently used page (the page at the end of the queue).

2. Add the new page to the cache and insert it at the front of the queue.

ii. If the cache has an empty frame:

1. Add the new page to the cache and insert it at the front of the queue.

d. Repeat steps (a) to (c) until all page references are processed.

3. At the end of processing page references, the cache will contain the most frequently used pages.

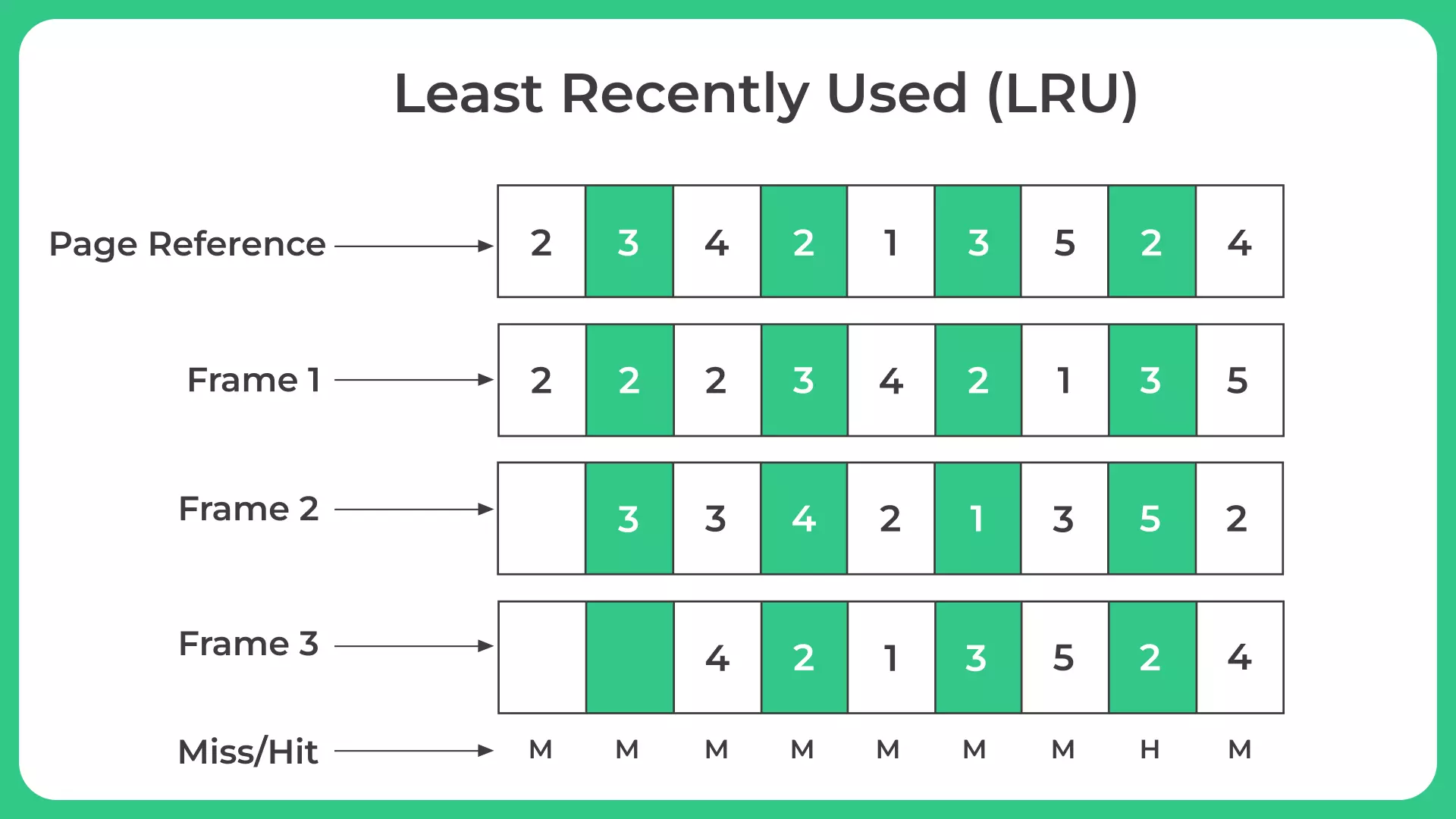

Example of Least Recently Used Page Replacement Algorithm

- Let’s consider a simple example to illustrate how the LRU algorithm works.

- Suppose we have a main memory that can hold only three pages: A, B, and C.

- The memory initially contains pages A and B.

- Now, when a new page D is requested, the LRU algorithm determines that page C, which hasn’t been accessed for the longest time, should be replaced with page D.

Initial Memory State: A B _ Memory State after Requesting D: A B D

Implemtation of Least Recently Used (LRU) Algorithm

#include <iostream>

#include <list>

#include <unordered_map>

class LRUCache {

private:

int capacity;

std::list lruList;

std::unordered_map::iterator> cache;

public:

LRUCache(int capacity) {

this->capacity = capacity;

}

int get(int key) {

if (cache.find(key) == cache.end()) {

return -1; // Key not found in cache

}

// Move the accessed key to the front of the LRU list

lruList.splice(lruList.begin(), lruList, cache[key]);

return *cache[key]; // Return the value associated with the key

}

void put(int key, int value) {

if (cache.find(key) != cache.end()) {

// Key already exists in the cache

// Move the key to the front of the LRU list

lruList.splice(lruList.begin(), lruList, cache[key]);

*cache[key] = value; // Update the value

} else {

if (lruList.size() == capacity) {

// Cache is full, remove the least recently used key

int lruKey = lruList.back();

cache.erase(lruKey);

lruList.pop_back();

}

// Insert the new key-value pair into the cache

lruList.push_front(key);

cache[key] = lruList.begin();

*cache[key] = value;

}

}

};

int main() {

LRUCache cache(2);

cache.put(1, 1);

cache.put(2, 2);

std::cout << cache.get(1) << std::endl; // Output: 1

cache.put(3, 3);

std::cout << cache.get(2) << std::endl; // Output: -1

cache.put(4, 4);

std::cout << cache.get(1) << std::endl; // Output: -1

std::cout << cache.get(3) << std::endl; // Output: 3

std::cout << cache.get(4) << std::endl; // Output: 4

return 0;

}

import java.util.HashMap;

import java.util.Map;

class LRUCache {

private int capacity;

private Map<Integer, Node> cache;

private Node head;

private Node tail;

class Node {

int key;

int value;

Node prev;

Node next;

public Node(int key, int value) {

this.key = key;

this.value = value;

}

}

public LRUCache(int capacity) {

this.capacity = capacity;

cache = new HashMap<>();

head = new Node(0, 0);

tail = new Node(0, 0);

head.next = tail;

tail.prev = head;

}

private void addToFront(Node node) {

node.next = head.next;

node.next.prev = node;

head.next = node;

node.prev = head;

}

private void removeNode(Node node) {

node.prev.next = node.next;

node.next.prev = node.prev;

}

private void moveToHead(Node node) {

removeNode(node);

addToFront(node);

}

public int get(int key) {

if (cache.containsKey(key)) {

Node node = cache.get(key);

moveToHead(node);

return node.value;

}

return -1;

}

public void put(int key, int value) {

if (cache.containsKey(key)) {

Node node = cache.get(key);

node.value = value;

moveToHead(node);

} else {

if (cache.size() == capacity) {

Node toRemove = tail.prev;

removeNode(toRemove);

cache.remove(toRemove.key);

}

Node newNode = new Node(key, value);

addToFront(newNode);

cache.put(key, newNode);

}

}

}

public class Main {

public static void main(String[] args) {

LRUCache cache = new LRUCache(2);

cache.put(1, 1);

cache.put(2, 2);

System.out.println(cache.get(1)); // Output: 1

cache.put(3, 3);

System.out.println(cache.get(2)); // Output: -1

cache.put(4, 4);

System.out.println(cache.get(1)); // Output: -1

System.out.println(cache.get(3)); // Output: 3

System.out.println(cache.get(4)); // Output: 4

}

}

from collections import OrderedDict

class LRUCache:

def __init__(self, capacity):

self.capacity = capacity

self.cache = OrderedDict()

def get(self, key):

if key not in self.cache:

return -1

# Move the accessed key to the end to mark it as the most recently used

self.cache.move_to_end(key)

return self.cache[key]

def put(self, key, value):

if key in self.cache:

# Key already exists, move it to the end and update the value

self.cache.move_to_end(key)

self.cache[key] = value

if len(self.cache) > self.capacity:

# Remove the least recently used key

self.cache.popitem(last=False)

# Example usage

cache = LRUCache(2)

cache.put(1, 1)

cache.put(2, 2)

print(cache.get(1)) # Output: 1

cache.put(3, 3)

print(cache.get(2)) # Output: -1

cache.put(4, 4)

print(cache.get(1)) # Output: -1

print(cache.get(3)) # Output: 3

print(cache.get(4)) # Output: 4

1 -1 -1 3 4

It creates a cache object with a capacity of 2 and performs a series of put and get operations on it.

- First, it puts key-value pairs (1, 1) and (2, 2) into the cache.

- Then, it retrieves the value associated with key 1 using the get() method, which returns 1.

- Next, it puts a new key-value pair (3, 3) into the cache, which exceeds the capacity.

- When it tries to retrieve the value associated with key 2, it returns -1 since that entry was evicted due to reaching the cache’s capacity.

- It puts a new key-value pair (4, 4) into the cache, again exceeding the capacity and evicting the least recently used entry.

- Finally, it retrieves the values associated with keys 1, 3, and 4, which return -1, 3, and 4 respectively.

- The provided code implements the Least Recently Used (LRU) cache algorithm in Java.

- The LRUCache class uses a combination of a HashMap and a doubly linked list to store key-value pairs.

- The get() method retrieves the value associated with a key and moves the corresponding node to the front of the list.

- The put() method inserts or updates a key-value pair, removing the least recently used item if the cache is full.

- The code demonstrates the usage of the LRUCache class with example put and get operations.

In the example usage, a cache object is created with a capacity of 2. Key-value pairs are added using the put() method, and values are retrieved using the get() method. The output of the get() method for each key is printed.

The output of the program will be:

- 1 (since the value associated with key 1 is present)

- -1 (since key 2 was evicted from the cache)

- -1 (since key 1 was evicted from the cache)

- 3 (the value associated with key 3)

- 4 (the value associated with key 4)

The code demonstrates how the LRU cache implementation using OrderedDict in Python ensures that the most recently used items are moved to the end of the cache, while evicting the least recently used items when the cache exceeds its capacity.

The LRU algorithm offers several benefits and finds applications in various systems:

Efficient Cache Management: The LRU algorithm is commonly used in CPU caches to improve cache hit rates and reduce memory latency.

Database Management Systems: LRU is used in database systems to manage the buffer pool, ensuring that frequently accessed data remains in memory.

Web Caching: LRU is employed in web caching mechanisms to store and retrieve frequently accessed web pages, enhancing browsing speed.

While the LRU algorithm offers significant advantages, it also faces certain challenges and limitations:

Implementation Complexity: Implementing the LRU algorithm efficiently requires careful design and consideration of data structures, which can be complex.

High Overhead: Tracking page access times and maintaining the LRU list incurs additional overhead, which can impact overall system performance.

Unequal Access Patterns: In scenarios where page access patterns deviate significantly from the assumption of locality, the LRU algorithm may not perform optimally.

Comparing LRU with Most Recently Used Algorithm

- In contrast to the LRU algorithm, the Most Recently Used (MRU) algorithm replaces the page that has been accessed most recently.

- While LRU focuses on discarding the least recently used page, MRU prioritizes the most recently used page for replacement.

- Both LRU and MRU have their advantages and use cases. LRU tends to perform better in scenarios where the locality of reference is high, as it maximizes the cache hit rate by keeping frequently accessed pages in memory.

Conclusion

The Least Recently Used (LRU) algorithm is a powerful page replacement strategy that optimizes memory management in operating systems.

By evicting the least recently used page, it improves cache hit rates and enhances system performance. Understanding the LRU algorithm’s principles, benefits, and limitations is crucial for system designers and software developers.

Read More:

- Memory Management Introduction

- Partition Allocation Method

- Buddy- System Allocator

- Paging

- Types of Paging

- Fragmentation

- Mapping Virtual address to Physical Address.

- Virtual Memory

- Demand Paging

- Implementation of Demand paging and page fault

- Segmentation

- Page Replacement Algorithms

- Thrashing

- Belady’s Anomaly

- Static vs Dynamic Loading

- Static vs Dynamic Linking

- Swapping

- Translational Look Aside Buffer

- Process Address Space

- Difference between Segmentation and Paging

Prime Course Trailer

Related Banners

Get PrepInsta Prime & get Access to all 200+ courses offered by PrepInsta in One Subscription

Get over 200+ course One Subscription

Courses like AI/ML, Cloud Computing, Ethical Hacking, C, C++, Java, Python, DSA (All Languages), Competitive Coding (All Languages), TCS, Infosys, Wipro, Amazon, DBMS, SQL and others

Login/Signup to comment