Process Synchronization in Operating System (OS)

Process synchronization in an operating system (OS) ensures that multiple processes can execute concurrently without interfering with one another. On this page, we discuss the importance, techniques, and challenges of process synchronization in operating systems. It is the mechanism by which memory and other resources are shared among different processes while keeping data consistent and avoiding conflicts.

Process Synchronization in Operating System

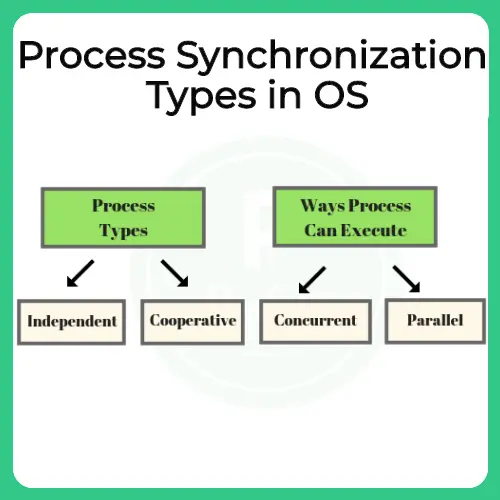

Process synchronization is a fundamental concept in operating systems that deals with coordinating the execution of multiple processes so that they operate correctly when accessing shared resources or data. It is especially important in multiprocessing environments, where processes may run concurrently or in parallel.

There are two main ways processes can execute in a system:

1. Concurrent Execution

- In concurrent execution, multiple processes appear to run at the same time, but on a single core the CPU executes instructions from only one process at any instant.

- The CPU scheduler rapidly switches between processes, giving the illusion of simultaneous execution. This switching is known as context switching.

- At any moment the CPU runs a single process. The scheduler can interrupt that process, possibly in the middle of an operation, and move on to another one.

Concurrent execution brings the following challenges:

- Data inconsistency due to race conditions.

- Critical section problems when multiple processes access shared memory.

- The need for proper synchronization tools such as semaphores or mutexes.

2. Parallel Execution

- In parallel execution, two or more instructions from different processes are executed simultaneously using multiple CPU cores.

- Each core handles a separate process or thread, allowing true parallelism.

- This execution model is used in multi-core processors, which are now standard in modern computing systems.

Parallel execution brings the following challenges:

- Careful synchronization is required to prevent data corruption.

- Deadlocks, livelocks, and starvation need to be avoided.

Why Is Process Synchronization Important?

When several threads (or processes) share data and run in parallel on different cores, changes made by one process may overwrite changes made by another, leaving the data inconsistent. The processes therefore need to be synchronized. Coordinating processes and their access to system resources so that this cannot happen is known as process synchronization.

Classic Banking Example –

- Suppose your bank account holds $5000.

- You try to withdraw $4000 through net banking and, at the same time, through an ATM.

- Net banking: at time t = 0 ms the bank checks your balance and finds $5000. The $4000 you are requesting is less than that, so it lets you proceed, and at t = 1 ms it connects you to the server to transfer the amount.

- ATM: at time t = 0.5 ms the bank checks your available balance, which is still $5000, and so lets you enter your ATM PIN and request the withdrawal.

- At t = 1.5 ms the ATM dispenses $4000 in cash, and at t = 2 ms the $4000 net banking transfer completes.

Effect on the system

Because the two requests were processed concurrently and each took time to complete, you were able to withdraw $3000 more than your balance: $8000 in total was paid out of an account that held only $5000.
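The timeline above can be replayed step by step. The following is only a sketch: it uses no real threads, and the variable names (`balance`, `request`, `paid_out`, and the two `*_ok` flags) are made up for illustration. It simply performs the two balance checks first and the two withdrawals afterwards, in the order given in the example.

```python
# Deterministic replay of the banking timeline (illustrative names, no real threads).
balance = 5000   # shared account balance
request = 4000   # amount requested on each channel
paid_out = 0     # total money that actually left the account

# t = 0 ms: net banking checks the balance
net_banking_ok = balance >= request

# t = 0.5 ms: the ATM checks the same balance, which nobody has changed yet
atm_ok = balance >= request

# t = 1.5 ms: the ATM dispenses the cash
if atm_ok:
    balance -= request
    paid_out += request

# t = 2 ms: the net banking transfer completes
if net_banking_ok:
    balance -= request
    paid_out += request

print(paid_out)  # 8000
print(balance)   # -3000
```

Both checks pass because each one reads the balance before either withdrawal has been recorded. The check and the update are separate steps, and nothing stops the other channel from running in between.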

How to solve this Situation

To avoid such situations, process synchronization is used: while process P1 is working on the shared data, a concurrent process P2 that wants the same data is made to wait, and P2 is allowed to proceed only once P1 completes.
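One possible way to get this behaviour in a program is to protect the balance check and the withdrawal with a single lock, so that they happen as one indivisible step. The sketch below uses threads and Python's `threading.Lock` in place of real OS processes. The `Account` class and the `attempt` function are hypothetical names chosen for this example, not part of any banking system.

```python
import threading

class Account:
    """Hypothetical account whose check-and-withdraw step is guarded by a lock."""

    def __init__(self, balance):
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw(self, amount):
        # Only one thread at a time can be inside this block, so the
        # check and the update can no longer be separated.
        with self._lock:
            if self.balance >= amount:
                self.balance -= amount
                return True
            return False

account = Account(5000)
results = []  # (channel, succeeded) pairs

def attempt(channel):
    results.append((channel, account.withdraw(4000)))

threads = [threading.Thread(target=attempt, args=(name,))
           for name in ("net banking", "ATM")]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(account.balance)  # 1000
```

Whichever channel gets the lock first succeeds. The other one sees a balance of 1000 when its turn comes and is refused. Which channel wins can vary from run to run, but exactly one withdrawal goes through.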

Process synchronization also prevents race conditions. A race condition is a situation in which several processes access and manipulate the same data concurrently, and the outcome of the execution depends on the particular order in which the accesses take place.
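To see how the order of accesses changes the outcome, consider two processes that each add 1 to a shared counter by first reading it and then writing back the incremented value. The sketch below does not use real processes. The helper `run` and the two schedules are made up for this illustration, and each schedule simply lists which process performs which step, in order.

```python
def run(schedule):
    """Replay a list of (process, step) pairs and return the final counter."""
    shared = {"counter": 0}
    local = {}  # each process's private copy of the value it read
    for process, step in schedule:
        if step == "read":
            local[process] = shared["counter"]
        else:  # "write": store the private copy plus one
            shared["counter"] = local[process] + 1
    return shared["counter"]

# P1 finishes completely before P2 starts.
serial = [("P1", "read"), ("P1", "write"), ("P2", "read"), ("P2", "write")]

# P2 reads before P1 has written, so P1's update is lost.
interleaved = [("P1", "read"), ("P2", "read"), ("P1", "write"), ("P2", "write")]

print(run(serial))       # 2
print(run(interleaved))  # 1
```

The same two processes run the same steps in both cases. Only the order differs, and so does the result.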

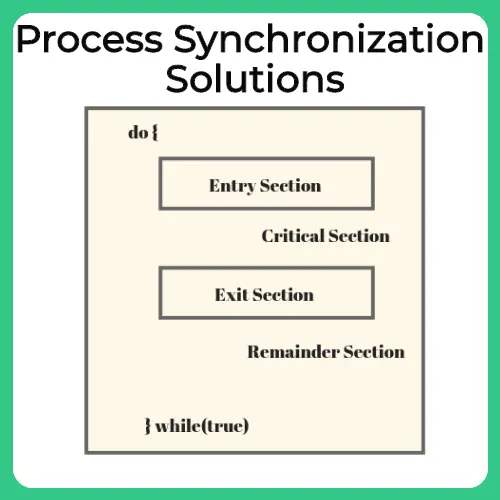

The main sections of a process’s code are –

- Entry Section – To enter the critical section, a process must request permission. The entry section code implements this request.

- Critical Section – This is the segment of code in which the process changes common variables, updates a table, writes to a file, and so on. When one process is executing in its critical section, no other process is allowed to execute in its critical section.

- Exit Section – The critical section is followed by the exit section, which marks the end of the critical section and lets other processes enter.

- Remainder Section – The remaining code of the process is known as the remainder section.

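The four sections can be marked out in a small program. This is a minimal sketch that assumes threads stand in for processes and that a `threading.Lock` is used as the entry and exit mechanism. The names `counter`, `lock`, and `worker` are illustrative only.

```python
import threading

counter = 0              # shared variable
lock = threading.Lock()  # guards the critical section

def worker(iterations):
    global counter
    for _ in range(iterations):
        lock.acquire()        # entry section: request permission to enter
        try:
            counter += 1      # critical section: update the shared variable
        finally:
            lock.release()    # exit section: allow another thread to enter
        _ = iterations * 2    # remainder section: work on private data only

threads = [threading.Thread(target=worker, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 4000
```

Four threads each perform 1000 increments, and because every increment happens inside the critical section, none of them is lost.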
Requirements for a Process Synchronization Solution

Mutual Exclusion

Only one process at a time is allowed to execute inside the critical section. All cooperating processes must abide by this rule.

Attacks that exploit race conditions, for example against banking systems, work by getting around this rule, which makes it one of the most important parts of any concurrent code.

Progress

As the name suggests, the system must keep making progress. If no process is executing in the critical section and some processes are waiting to enter it, then one of the waiting processes must be allowed in, and the decision about which one enters next cannot be postponed indefinitely. Processes executing in their remainder sections take no part in that decision.

Bounded Waiting

After a process P1 has requested entry to its critical section, there must be a limit on the number of times other processes are allowed to enter their critical sections before P1’s request is granted. Without such a limit, P1 could starve, waiting forever for its turn.
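One way to picture bounded waiting is a ticket system, like the queue numbers handed out at a service counter. The sketch below is an assumption-laden illustration rather than a standard library facility: `TicketLock` and its methods `take_ticket`, `enter`, and `leave` are hypothetical names, built here on top of Python's `threading.Condition`. A thread holding ticket k can be overtaken only by threads holding smaller tickets, so its wait is bounded.

```python
import threading

class TicketLock:
    """Hypothetical first-come, first-served lock based on ticket numbers."""

    def __init__(self):
        self._cond = threading.Condition()
        self._next_ticket = 0   # next ticket number to hand out
        self._now_serving = 0   # ticket currently allowed to enter

    def take_ticket(self):
        with self._cond:
            ticket = self._next_ticket
            self._next_ticket += 1
            return ticket

    def enter(self, ticket):
        with self._cond:
            while self._now_serving != ticket:
                self._cond.wait()   # wait until it is this ticket's turn

    def leave(self):
        with self._cond:
            self._now_serving += 1  # hand the turn to the next ticket
            self._cond.notify_all()

lock = TicketLock()
order = []  # tickets in the order they entered the critical section

def worker(ticket):
    lock.enter(ticket)
    order.append(ticket)  # critical section
    lock.leave()

tickets = [lock.take_ticket() for _ in range(4)]
# Start the threads in reverse ticket order to show that start order does not matter.
threads = [threading.Thread(target=worker, args=(t,)) for t in reversed(tickets)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(order)  # [0, 1, 2, 3]
```

Even though the thread with ticket 3 starts first, it enters last. No thread can be overtaken by a ticket issued after its own, which is the limit that bounded waiting asks for.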

To further solve such situations many more solutions are available which will be discussed in detail in further posts –

- Semaphores

- Critical Section

- Test and Set

- Peterson’s Solution

- Mutex

You do not need to know the following topics yet. Read them later; we will cover them in future posts –

- Synchronization Hardware

- Mutex Locks

Note that Geeks4Geeks introduces Peterson’s Solution, Test and Set, and semaphores in its process synchronization post, which can be confusing at this stage. You can skip those for now.

Wrapping Up

Process synchronization is a crucial aspect of operating systems that ensures the correct execution of concurrent and parallel processes when they access shared resources. Without proper synchronization, systems are prone to data inconsistency and race conditions. A correct solution must satisfy three conditions: mutual exclusion, progress, and bounded waiting. Understanding and implementing synchronization mechanisms is essential for maintaining data integrity and good system performance.

FAQs

Without synchronization, concurrent processes may interfere with each other, leading to data corruption and unpredictable system behavior.

Synchronization affects performance: improper synchronization can cause delays or deadlocks, while efficient synchronization keeps process execution smooth.

Synchronization is not needed only on multi-core systems: even on a single-core system it is necessary, because of context switching and shared resource access by multiple processes.

Synchronization mechanisms are not limited to operating systems: they are also used in distributed systems, real-time systems, and multithreaded applications to manage shared resources safely.
