Naive Bayes Classifiers

Introduction to Naive Bayes Classifiers in Machine Learning

The Naive Bayes classifier is one of the simplest algorithms in machine learning, yet it's known for delivering strong and reliable results. Don't let its simplicity fool you: it's widely used in practical applications, especially for text data, such as sorting emails or filtering spam.

In this article, we’ll walk you through everything you need to know about Naive Bayes. We’ll start with its basic idea, explain how it works, and talk about where it’s commonly used. We’ll also highlight its benefits and show you a real-world example of how it’s applied in text classification tasks.

What is Naive Bayes?

Naive Bayes is a family of probabilistic algorithms based on Bayes' Theorem. The term "naive" comes from the algorithm's assumption that all features used in the classification are independent of each other once the class is known. This simplification rarely holds true in real-world scenarios.

Despite this seemingly unrealistic assumption, Naive Bayes classifiers often perform remarkably well in practice, particularly on text classification.

Bayes’ Theorem provides a way to update the probability estimates of a hypothesis as more evidence or information becomes available. Mathematically, it is expressed as:

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}$$

Where:

- P(A|B) is the posterior probability: the probability of hypothesis A given the evidence B.

- P(B|A) is the likelihood: the probability of evidence B given that A is true.

- P(A) is the prior probability: the initial probability of hypothesis A.

- P(B) is the marginal probability: the total probability of evidence B.
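A small worked calculation makes the formula concrete. The numbers below are hypothetical and chosen only for illustration:

- 40% of emails are spam (A = "email is spam").
- The word "free" (B) appears in 50% of spam emails.
- "free" appears in 5% of non-spam emails.

P(B) is obtained by adding up the probability of seeing "free" in each class.

```python
# Hypothetical numbers, chosen only to illustrate Bayes' Theorem
p_spam = 0.40              # P(A): prior probability that an email is spam
p_free_given_spam = 0.50   # P(B|A): probability "free" appears in a spam email
p_free_given_ham = 0.05    # probability "free" appears in a non-spam email

# P(B): total probability of seeing "free", summed over spam and non-spam
p_free = p_free_given_spam * p_spam + p_free_given_ham * (1 - p_spam)

# P(A|B): posterior probability of spam after seeing "free"
p_spam_given_free = p_free_given_spam * p_spam / p_free
print(round(p_spam_given_free, 3))  # 0.87
```

Seeing "free" raises the estimated probability of spam from the prior of 0.40 to about 0.87.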

Understanding Naive Bayes and Machine Learning

In machine learning, we aim to build models that can learn from data and make predictions. Naive Bayes is a supervised learning algorithm, meaning it learns from labeled data (data with correct answers).

Here’s how it works:

- It learns the probabilities of different features (like words in an email) belonging to different classes (like spam or not spam).

- Then, when it sees new data, it combines these learned probabilities with how common each class is overall (the prior). It calculates the probability of the data belonging to each class and picks the class with the highest probability.
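The prediction step can be written as a formula in the usual notation. Here x_1, …, x_n are the features of the new data point and y is a candidate class. The denominator P(x_1, …, x_n) from Bayes' Theorem is the same for every class, so it can be dropped when comparing classes. The naive independence assumption then turns the likelihood into a product:

$$\hat{y} = \arg\max_{y} \; P(y) \prod_{i=1}^{n} P(x_i \mid y)$$

In practice, implementations usually add log-probabilities instead of multiplying raw probabilities. Multiplying many small numbers can underflow to zero, and working in logs avoids that.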

Where is Naive Bayes Used?

Naive Bayes classifiers are used in a wide range of applications. Here are a few common ones:

1. Spam Detection

Classifies emails as spam or not based on words used in the subject or body.

2. Sentiment Analysis

Used to analyze customer reviews or tweets to understand whether the sentiment is positive, negative, or neutral.

3. Document Classification

Groups documents into categories like news, sports, politics, tech, etc.

4. Medical Diagnosis

Helps in predicting diseases based on symptoms.

5. Face Recognition

Used in some basic face recognition systems.

6. Recommendation Systems

Sometimes used in hybrid systems to recommend products or content.

Understanding Naive Bayes Classifier

Let’s understand how a Naive Bayes classifier works with a simple example:

Example:

Imagine we have data about whether someone plays cricket based on the weather. The data has two features:

- Weather: Sunny, Rainy

- Windy: Yes, No

The target variable is Plays Cricket: Yes or No.

The classifier will:

- Estimate, from the training data, how often each outcome (Yes or No) occurs. It also estimates how often each Weather and Windy value occurs given that outcome.

- Multiply the probabilities together assuming the features are independent (the naive assumption).

- Compare the probabilities for “Yes” and “No” and pick the higher one.
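The three steps above can be sketched from scratch in plain Python. Some assumptions apply to this sketch:

- The seven-row dataset is hypothetical, made up for illustration.
- The helper names `train`, `score`, and `predict` are our own.
- No smoothing is applied, so a feature value never seen with a class gives that class a score of zero.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (weather, windy, plays_cricket)
data = [
    ("Sunny", "No", "Yes"),
    ("Sunny", "Yes", "Yes"),
    ("Rainy", "No", "No"),
    ("Rainy", "Yes", "No"),
    ("Sunny", "No", "Yes"),
    ("Rainy", "No", "Yes"),
    ("Sunny", "Yes", "No"),
]


def train(rows):
    """Count class frequencies and per-class feature-value frequencies."""
    class_counts = Counter(row[-1] for row in rows)
    # feature_counts[label][i][value] = how often feature i took `value` in class `label`
    feature_counts = defaultdict(lambda: defaultdict(Counter))
    for *features, label in rows:
        for i, value in enumerate(features):
            feature_counts[label][i][value] += 1
    return class_counts, feature_counts


def score(features, label, class_counts, feature_counts):
    """Unnormalized posterior: P(label) times the product of P(feature_i | label)."""
    p = class_counts[label] / sum(class_counts.values())  # prior P(label)
    for i, value in enumerate(features):
        # Likelihood estimated by simple counting (no smoothing)
        p *= feature_counts[label][i][value] / class_counts[label]
    return p


def predict(features, class_counts, feature_counts):
    """Return the highest-scoring label along with all class scores."""
    scores = {label: score(features, label, class_counts, feature_counts)
              for label in class_counts}
    return max(scores, key=scores.get), scores


class_counts, feature_counts = train(data)
label, scores = predict(("Sunny", "No"), class_counts, feature_counts)
print(label)  # Yes
```

With this toy data, a sunny, calm day scores 9/28 ≈ 0.32 for "Yes" and 1/21 ≈ 0.05 for "No", so the classifier predicts "Yes". These scores are not normalized. Dividing each by their sum would give the posterior probabilities.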

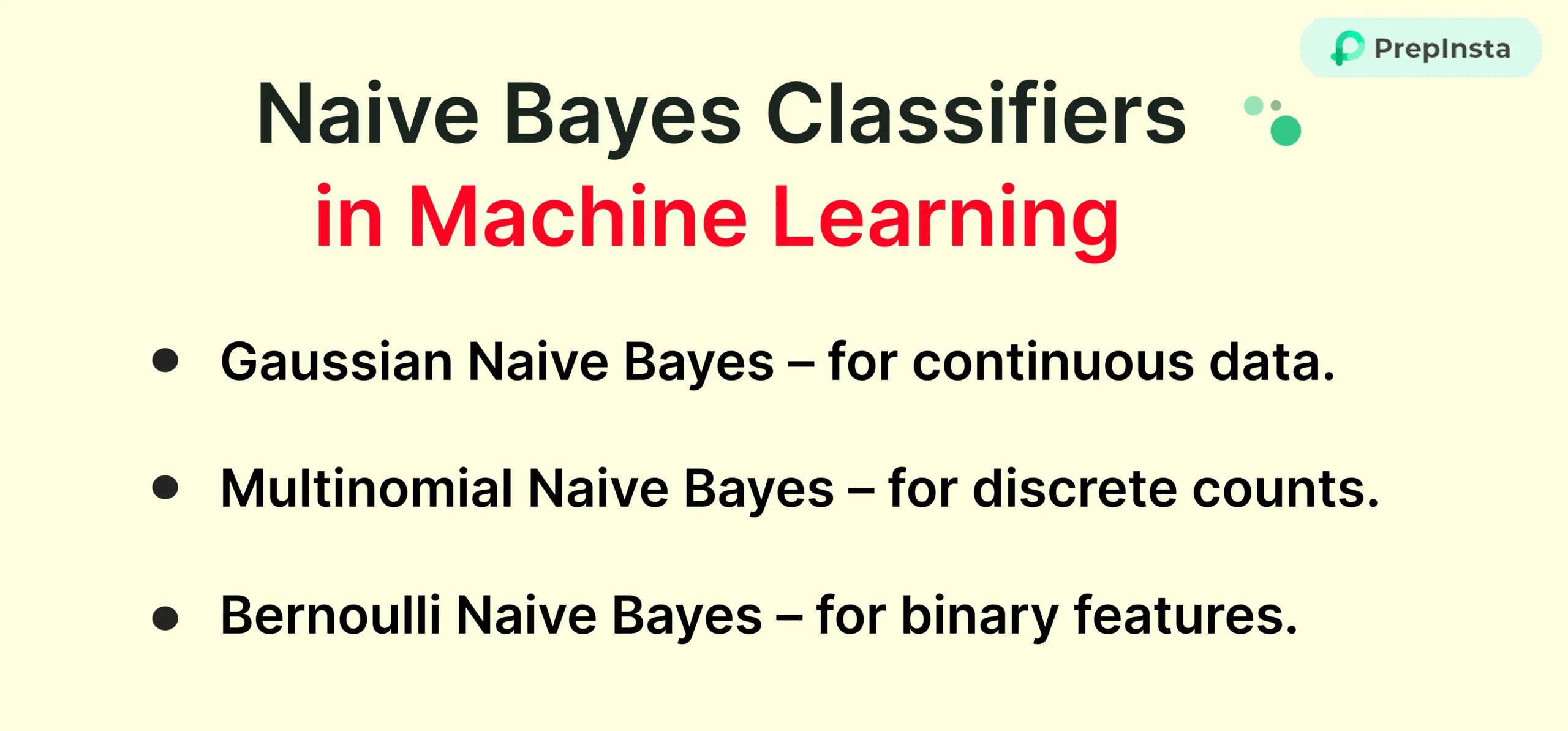

Types of Naive Bayes Classifiers

There are three common types of Naive Bayes models:

- Gaussian Naive Bayes: Used when features are continuous and assumed to follow a normal distribution within each class (e.g., height, weight).

- Multinomial Naive Bayes: Used for discrete counts. Ideal for text classification (e.g., the number of times a word appears in a document).

- Bernoulli Naive Bayes: Similar to Multinomial, but works with binary (boolean) features (e.g., whether a word is present or not).
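Here is a minimal sketch of the three variants using scikit-learn's `GaussianNB`, `MultinomialNB`, and `BernoulliNB`. The tiny arrays are hypothetical and only show which kind of input each variant expects. Classes 0 and 1 are arbitrary labels.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB, GaussianNB, MultinomialNB

y = np.array([0, 0, 1, 1])  # hypothetical class labels

# Continuous features (hypothetical height in cm, weight in kg) -> GaussianNB
X_cont = np.array([[150, 50], [160, 55], [180, 80], [185, 90]])
gnb = GaussianNB().fit(X_cont, y)

# Word counts over a 3-word vocabulary -> MultinomialNB
X_counts = np.array([[3, 0, 1], [2, 0, 0], [0, 4, 1], [0, 3, 2]])
mnb = MultinomialNB().fit(X_counts, y)

# Word present (1) or absent (0) -> BernoulliNB
X_binary = (X_counts > 0).astype(int)
bnb = BernoulliNB().fit(X_binary, y)

print(gnb.predict([[182, 85]]))  # [1]
print(mnb.predict([[0, 2, 0]]))  # [1]
print(bnb.predict([[0, 1, 0]]))  # [1]
```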

Advantages of Naive Bayes Classifier

Naive Bayes comes with several benefits:

- Simple and Easy to Implement: Even with basic coding knowledge, you can implement Naive Bayes using Python libraries like scikit-learn.

- Fast and Efficient: Training and prediction are very quick, so it scales well to large datasets.

- Works Well with Text Data: Ideal for Natural Language Processing tasks like spam detection and sentiment analysis.

- Performs Well Even with Small Data: Requires less training data than more complex algorithms.

- Less Prone to Overfitting: Because the model is simple and has few parameters, it avoids overfitting in many cases.

Use Case – Text Classification (Spam Detection)

Let’s walk through a real-world example of text classification using Naive Bayes.

Problem: Classify an email as spam or not spam.

Steps:

- Collect Data: Collect a dataset of emails labeled as spam or not spam.

- Preprocess Text: Remove stopwords and punctuation, and convert the text into numerical features (such as word counts or TF-IDF).

- Train the Model: Use a Multinomial Naive Bayes classifier to train on the labeled data.

- Make Predictions: When a new email comes in, the model calculates the probability of it being spam or not spam and assigns the more probable class.

Example with Python:

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Tiny labeled training set
emails = ["Win money now!", "Hi, how are you?", "Exclusive offer just for you!", "Meeting at 3 PM"]
labels = [1, 0, 1, 0]  # 1 for spam, 0 for not spam

# Turn each email into a vector of word counts
vectorizer = CountVectorizer()
features = vectorizer.fit_transform(emails)

# Train a Multinomial Naive Bayes model on the counts
model = MultinomialNB()
model.fit(features, labels)

# Words not seen during training (e.g., "an") are ignored by the vectorizer
test_email = ["Win an exclusive offer now"]
test_features = vectorizer.transform(test_email)
print(model.predict(test_features))  # Output: [1] -> spam
```
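The preprocessing step (stopword removal and TF-IDF) and the training step can also be chained with scikit-learn's `make_pipeline`. This sketch reuses the same four toy emails, and the two new emails are hypothetical. A few details:

- `TfidfVectorizer(stop_words="english")` uses scikit-learn's built-in English stopword list.
- Its default tokenizer drops punctuation and single-character tokens.
- `alpha=1.0` (the default for `MultinomialNB`) is Laplace smoothing.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

emails = ["Win money now!", "Hi, how are you?", "Exclusive offer just for you!", "Meeting at 3 PM"]
labels = [1, 0, 1, 0]  # 1 for spam, 0 for not spam

spam_filter = make_pipeline(
    # Lowercases, tokenizes (dropping punctuation), removes English stopwords, applies TF-IDF
    TfidfVectorizer(stop_words="english"),
    # alpha=1.0 applies Laplace smoothing to the word probabilities
    MultinomialNB(alpha=1.0),
)
spam_filter.fit(emails, labels)

new_emails = ["Exclusive money offer", "Meeting at 4 PM"]
print(spam_filter.predict(new_emails))  # [1 0]
# Estimated probability of [not spam, spam] for each new email
print(spam_filter.predict_proba(new_emails).round(2))
```

Using a pipeline means the same preprocessing is applied automatically at prediction time, so raw strings can be passed straight to `predict`.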

Limitations of Naive Bayes

Although Naive Bayes is great, it’s not perfect:

- Independence Assumption is Unrealistic: Features are rarely independent in real data.

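- Zero Probability Issue: If a feature value (such as a word) never appears with a class in the training data, its estimated probability for that class is zero. Because the probabilities are multiplied, this makes the whole score for that class zero.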

- Not Suitable for Complex Relationships: Can’t capture interactions between features.

Techniques like Laplace Smoothing fix the zero-probability issue. For the other limitations, the usual remedy is to switch to a more advanced model.
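Laplace (add-one) smoothing adds a small count, alpha, to every word count so that no estimated probability is exactly zero. The estimate becomes P(word | class) = (count + alpha) / (total words in class + alpha × vocabulary size).

This minimal sketch uses Python's `fractions` module. The helper `word_likelihood` is our own, and the counts are hypothetical:

- The word "prize" never appeared in the non-spam training text.
- That text contains 7 words in total.
- The vocabulary has 14 words.

```python
from fractions import Fraction


def word_likelihood(word_count, total_words, vocab_size, alpha=1):
    """Estimate P(word | class) with additive smoothing (alpha=1 is Laplace smoothing)."""
    return Fraction(word_count + alpha, total_words + alpha * vocab_size)


# Hypothetical counts for the non-spam class
total_words_ham = 7   # total word occurrences in non-spam training emails
vocab_size = 14       # number of distinct words in the whole training set
prize_count = 0       # "prize" never appeared in non-spam emails

unsmoothed = word_likelihood(prize_count, total_words_ham, vocab_size, alpha=0)
smoothed = word_likelihood(prize_count, total_words_ham, vocab_size, alpha=1)
print(unsmoothed)  # 0
print(smoothed)    # 1/21
```

With alpha=0, any email containing "prize" gets a non-spam score of exactly zero, no matter what other words it contains. With alpha=1, the word only lowers that score.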

To Wrap it Up

Naive Bayes may seem too simple at first glance, but don’t let that fool you. Its speed, simplicity, and surprising accuracy make it a solid choice for many applications, especially when working with text data. Whether you’re a beginner or a pro in machine learning, understanding Naive Bayes can give you a strong foundation.

It’s a perfect starting point to enter the world of classification algorithms.

FAQs

Yes, it’s extremely fast and efficient, even with large datasets.

It depends on the dataset. Naive Bayes tends to work well with text data and when the features are roughly independent given the class. Decision Trees or SVMs may work better for complex patterns or strong interactions between features.